ChatGPT Health Could Fix Patient Engagement—But at What Cost?

My 2026 healthcare predictions are already off to a great start. My top AI prediction was that patient engagement tools would dominate 2026—and now OpenAI just announced ChatGPT Health, a new feature that lets consumers connect and interact with their health data.

I think this is great for patient engagement. But I can't shake the feeling that something about it is… ominous.

In this article, I'll break down ChatGPT Health, explain why patient engagement will be the hottest niche in 2026, and explore how this new feature could affect patients, physicians, and the health system.

The Deets: ChatGPT Health

ChatGPT is already one of the most common places people go for health questions. OpenAI says over 230 million people globally ask health and wellness questions on ChatGPT every week.

So OpenAI is formalizing that behavior into a dedicated product: ChatGPT Health, a separate Health “space” inside ChatGPT built specifically for health and wellness conversations.

What it is

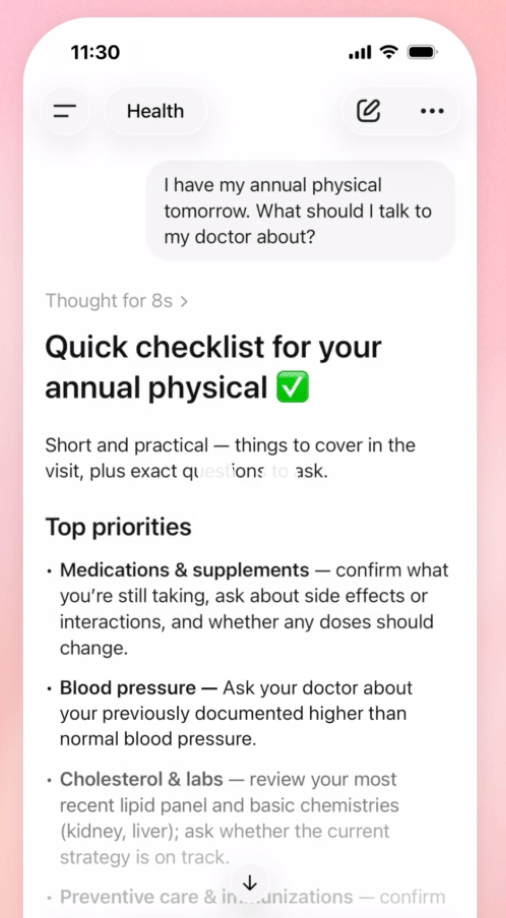

The headline feature is grounding the conversation in your own data. You can securely connect medical records (labs, visit summaries, clinical history) plus wellness apps like Apple Health, Function, Weight Watchers, Peloton, MyFitnessPal, and even Instacart, so ChatGPT can help you:

make sense of test results

prep for appointments

spot trends (sleep, activity, weight, etc.)

think through diet and workout changes

even compare the tradeoffs of insurance options based on your patterns

OpenAI is explicit about positioning: this is meant to support medical care, not replace it (they must have good legal counsel!). It’s “not intended for diagnosis or treatment,” but for helping people understand patterns and show up to medical conversations more prepared.

Example prompts they highlight:

“How’s my cholesterol trending?”

“Can you summarize my latest bloodwork before my appointment?”

“What questions should I bring to my appointment?”

ChatGPT Health Example

Privacy and security

ChatGPT Health lives in a separate, dedicated space within ChatGPT, with added protections designed for sensitive health info (OpenAI describes purpose-built encryption and isolation/compartmentalization).

The key line: conversations in ChatGPT Health are not used to train OpenAI’s foundation models. And if you start talking about health in regular ChatGPT, they’ll suggest moving the conversation into Health for those added protections.

I appreciated Sergei Polevikov’s pushback:

“Enhanced security” compared to what, exactly? And does “no training” also mean no fine-tuning?

Worth a read (after you’re done reading this article, of course): ChatGPT Health Is Dancing Around HIPAA. Well Played, Sam!

The Focus of 2026: Patient Engagement

Patient engagement is the biggest “hidden market” in healthcare because it’s basically the space between encounters (the weeks of confusion after a discharge, unanswered portal messages, medication lists patients don’t understand, the follow-up that never gets scheduled).

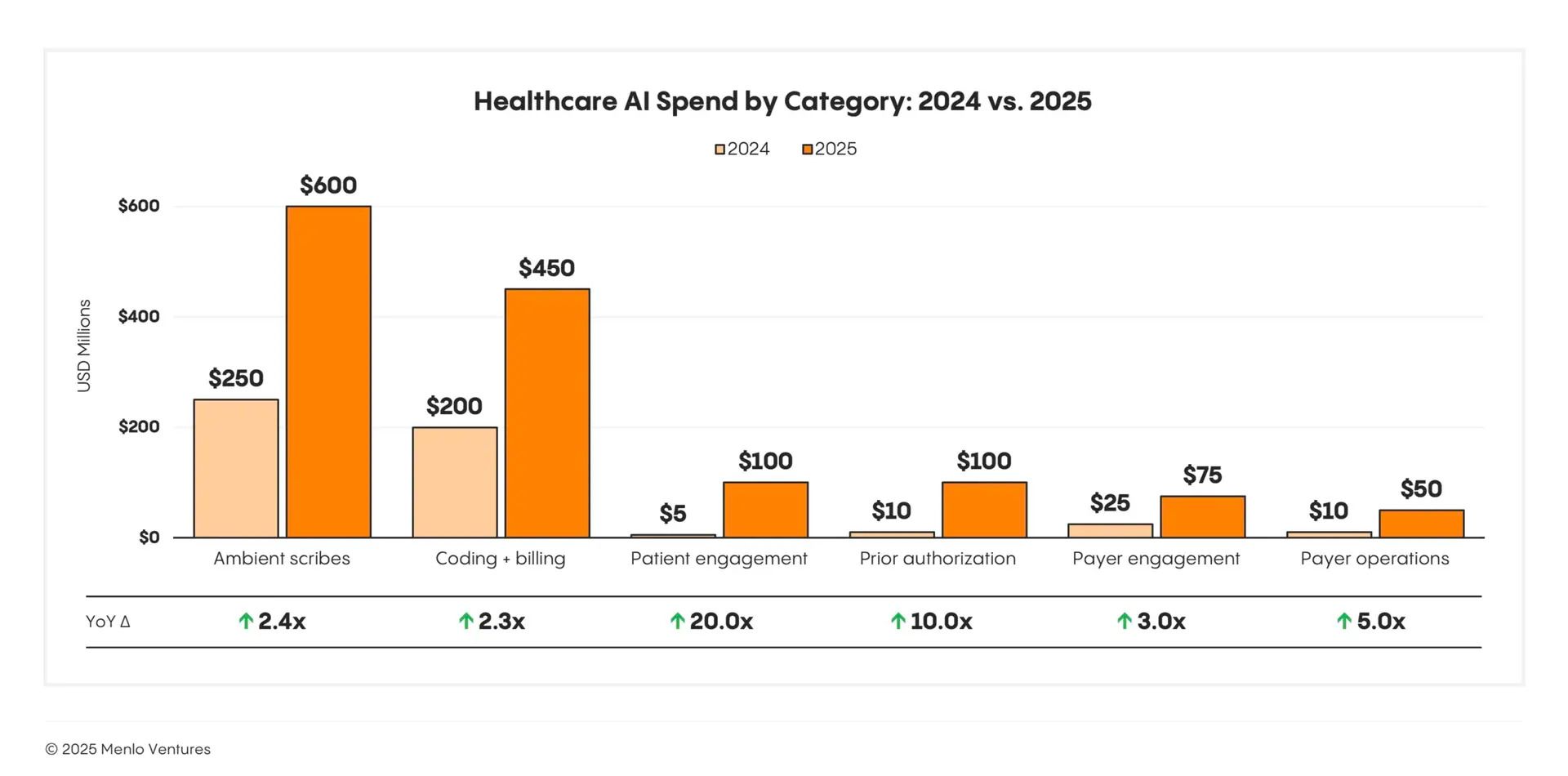

I’ve been writing about this for years, even when I wasn’t calling it “patient engagement.” In my 2026 predictions, I said this would be the hottest AI niche because it’s one of the only places where AI can do what humans can’t: provide consistent, 24/7, low-friction communication at scale.

My “$100B gap” piece made the core point: we spend an absurd amount of money on admin and access failures, yet software captures almost none of it. This is because engagement has historically been too labor-intensive to fix.

In that article, I broke “patient engagement” into four buckets (and they all map cleanly onto what OpenAI is trying to own):

Consumer wellness platforms (e.g., biomarker monitoring + lifestyle tracking)

AI triage (symptom intake + routing to the right level of care)

Scheduling automation (reducing no-shows + removing booking friction)

Care navigation (the post-visit “dead zone”: results, follow-ups, transitions, questions)

Source: Merlo Ventures

ChatGPT Health is OpenAI placing a massive bet that the new front door to “engagement” isn’t a portal or a care navigation app. Rather, it’s the conversational layer patients already use, now grounded in their actual labs, visit summaries, and wearable data.

Dashevsky’s Dissection

I'm cautiously optimistic about ChatGPT Health. It could meaningfully improve patient engagement—but it also creates a new layer between patients and clinicians that we should watch carefully.

Impact on patients

The best thing ChatGPT Health could do is shrink the core asymmetry in medicine. We physicians spend a decade learning pathophysiology and pharmacology. Most patients are trying to remember whether metformin is for blood pressure or diabetes. (FYI: if you're a patient reading this, you are not an "average" patient.)

If ChatGPT can help patients understand why they're taking meds, interpret trends (A1c, LDL, BNP, weight), and show up prepared with sharper questions—that's a win. Some examples:

"My A1c is down. Is that because of the meds, diet, or both?"

"What questions should I ask about this new cancer diagnosis?"

"What should I be asking before my colonoscopy or knee replacement?"

This is what I think engagement should look like.

Impact on physicians

More engaged patients are generally a win. My communication with engaged patients and their families is always smoother and more productive. Shared decision-making is easier, medication adherence improves, and outcomes probably improve.

But there are tradeoffs.

I can foresee the creation of an "over-informed" patient who walks in with 25 AI-generated questions, half of which aren't pertinent to the actual problem.

There's also a more interesting upside: if ChatGPT Health is truly grounded in longitudinal data across records and devices (data that I, as a physician at Hospital Huddle, often don't have access to), it might surface things we didn't have time to see in a 15-minute visit.

The big risk is trust drift. Even if ChatGPT says it's not medical advice, some patients will treat it like it is. Convincing a patient that ChatGPT is wrong may be tougher than it seems. "I googled it" becomes "I ChatGPT'd it."

Impact on health systems

If this improves adherence and follow-through, health systems should love it:

fewer readmissions

better med adherence

higher patient satisfaction

better outcomes

The ROI story becomes obvious (even though health systems aren't paying… yet).

But this is where my skepticism spikes.

Who's paying for this, and who's the customer? If the product is "free," we should be asking what the real business model is. (Remember the old adage: "If the product is free, you're the product.")

It's not hard to imagine a future where:

Health systems pay to be the "recommended" destination for certain conditions in a given geography. A patient asks ChatGPT about high cholesterol and gets referred to the nearby hospital that partnered with OpenAI.

Pharma pays to reach patients based on what they're asking (frankly, a terrifying thought). A patient searches for alternatives to losartan because of side effects and gets suggestions to try olmesartan, lisinopril, or nifedipine.

And the privacy question doesn't go away just because someone says "enhanced security."

In summary, ChatGPT Health could meaningfully improve patient engagement by helping people understand their health data and prepare for medical conversations. But as with any "free" platform aggregating sensitive information, we should watch carefully who ends up paying—and what they're paying for. The best tools empower patients, and the riskiest ones commoditize them.